| Client: | Georgia Tech Interactive Media Technology Center, Steelcase |

| Dates: | Aug 2014 – May 2016 |

| Skills/Subjects: | interaction design, JavaScript, UI design, usability testing |

Background

The Magic Window concept has appeared in several applications over the years, starting with Brian Davidson and Jeff Wilson. At its core, it is a live video stream that the viewer controls with body movement, such as hand gestures and leaning, detected through body and face tracking. Its current form is a web application, which lets it use WebRTC and other browser capabilities. It uses a Kinect to track users’ bodies in motion and a high-resolution fisheye video camera to capture everything in front of the display. Together, these components make it a platform for investigating how people interact remotely when given certain affordances.

Affordances are properties of a design that invite a particular interaction without explicit instruction; the contours of a computer mouse or the shape of a door handle, for example, suggest how to grasp it. How people notice and use those affordances is fundamental to interaction design. For Magic Window, we are currently testing that interaction in the context of teleconferencing, in partnership with the office furniture company Steelcase. The affordances we are testing are those one would expect of an ordinary window. For example, to see what is further to the left on the other side of the window, one leans or moves to the right to see around the corner. Likewise, one moves closer to the window to see more detail on the other side.
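The window behaviour described above follows from simple geometry: the rays from the viewer's eye through the edges of the window determine which part of the remote scene is visible. The sketch below is a hypothetical illustration, not the project's code. It assumes head coordinates in meters relative to the window centre (x to the right, y downward, z the distance from the display) and an ideal planar window; the names `visibleRange` and `windowView` are invented for this example.

```javascript
// Hypothetical sketch (not the project's code): the angular range of the remote
// scene visible through an ideal planar window, given a tracked head position.
// Assumed coordinates: meters, origin at the window centre, x to the right,
// y downward, z = viewer's distance from the display plane.

// Angles (radians, measured from the window normal) of the two rays that pass
// from the viewer's eye through opposite edges of the window along one axis.
function visibleRange(headOffset, distance, size) {
  if (!(distance > 0)) {
    throw new RangeError("distance must be positive");
  }
  const half = size / 2;
  return {
    min: Math.atan((-half - headOffset) / distance),
    max: Math.atan((half - headOffset) / distance),
  };
}

// Horizontal and vertical visible ranges for one head position.
function windowView(head, windowSize) {
  return {
    horizontal: visibleRange(head.x, head.z, windowSize.width),
    vertical: visibleRange(head.y, head.z, windowSize.height),
  };
}

// Example: a 1 m wide, 0.6 m tall window viewed from 1 m away.
const centred = windowView({ x: 0, y: 0, z: 1 }, { width: 1, height: 0.6 });
const leanRight = windowView({ x: 0.2, y: 0, z: 1 }, { width: 1, height: 0.6 });
const closer = windowView({ x: 0, y: 0, z: 0.5 }, { width: 1, height: 0.6 });
console.log(centred.horizontal, leanRight.horizontal, closer.horizontal);
```

Leaning right (positive `x`) pushes both edge angles toward negative values, so the view shifts to the left; halving `z` widens the horizontal range from about ±0.46 rad to ±0.79 rad (±π/4). These correspond to the two window affordances described in the paragraph.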

Leaning side to side changes the perspective of the video feed.

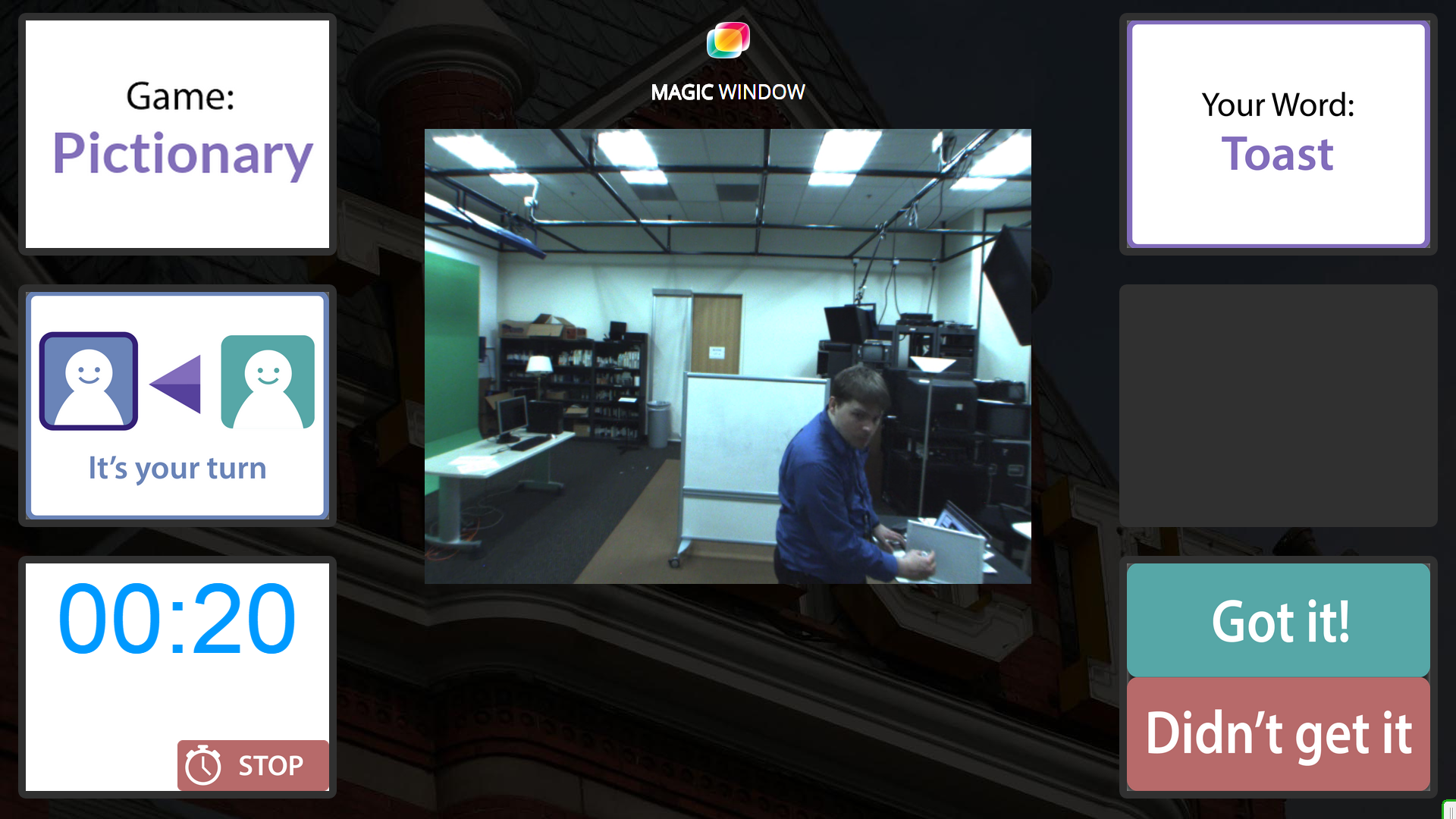

These interactions are simulated by combining the Kinect’s face tracking, which detects leaning, with a perspective algorithm applied to the fisheye camera’s video feed, which reveals more of the scene to one side. In addition, content suited to the current use case surrounds the video feed (see this article’s photo).
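The perspective algorithm itself is not described here. One common approach, shown only as a hedged sketch, is a remap table: for every pixel of the desired flat (rectilinear) view, compute its viewing ray, then find where that ray lands in the fisheye image. The sketch assumes an ideal equidistant fisheye lens (image radius `r = f * theta`, where `theta` is the angle from the optical axis) with principal point `cx`, `cy` and focal length `f` in pixels; a real lens would need calibration. The names `rayToFisheyePixel`, `buildRemap` and `applyRemap` are hypothetical.

```javascript
// Hypothetical sketch (not the project's algorithm): cut a flat, perspective-
// correct view out of a fisheye frame. Assumes an ideal equidistant fisheye
// (image radius r = f * theta) with principal point (cx, cy) and focal length f
// in pixels. Camera coordinates: x right, y down, z forward along the optical axis.

// Project a forward-pointing ray (z > 0) to fisheye pixel coordinates.
function rayToFisheyePixel([x, y, z], { cx, cy, f }) {
  const rxy = Math.hypot(x, y);
  if (rxy === 0) return { u: cx, v: cy }; // on-axis ray hits the image centre
  const theta = Math.atan2(rxy, z);       // angle from the optical axis
  const r = f * theta;                    // equidistant model
  return { u: cx + (r * x) / rxy, v: cy + (r * y) / rxy };
}

// Lookup table: for each output pixel, the fisheye (u, v) it should sample.
// hRange / vRange are the view's edge angles in radians (e.g. from head tracking).
function buildRemap(outWidth, outHeight, hRange, vRange, intrinsics) {
  const map = new Float32Array(outWidth * outHeight * 2);
  // A rectilinear image plane at z = 1 is spaced evenly in tan(angle).
  const x0 = Math.tan(hRange.min), x1 = Math.tan(hRange.max);
  const y0 = Math.tan(vRange.min), y1 = Math.tan(vRange.max);
  for (let j = 0; j < outHeight; j++) {
    const y = y0 + ((j + 0.5) / outHeight) * (y1 - y0);
    for (let i = 0; i < outWidth; i++) {
      const x = x0 + ((i + 0.5) / outWidth) * (x1 - x0);
      const { u, v } = rayToFisheyePixel([x, y, 1], intrinsics);
      const k = 2 * (j * outWidth + i);
      map[k] = u;
      map[k + 1] = v;
    }
  }
  return map;
}

// Apply the table to a single-channel frame with nearest-neighbour sampling;
// output pixels whose source lies outside the frame are set to 0.
function applyRemap(src, srcWidth, srcHeight, map, out) {
  for (let p = 0; p < out.length; p++) {
    const u = Math.round(map[2 * p]);
    const v = Math.round(map[2 * p + 1]);
    const inside = u >= 0 && u < srcWidth && v >= 0 && v < srcHeight;
    out[p] = inside ? src[v * srcWidth + u] : 0;
  }
  return out;
}
```

In a browser, a table like this would be rebuilt whenever the tracked head position changes and applied to every video frame, for example through canvas pixel data or, at high resolutions, a WebGL fragment shader.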

Testing

For our study with Steelcase, carried out with Georgia Tech’s Interactive Media Technology Center (IMTC), we tested this interaction using simple games such as charades and Taboo (pictured). Players on each side took turns giving the prompt and guessing before their synchronized timer ran out. We observed their interaction with tracking turned on and, as a control, turned off.

I developed this user-testing scenario while also making general improvements to the user interface. Our testing ran from March through April 2015, with results forthcoming from IMTC.
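The games in these sessions relied on a timer synchronized between the two sites, but how it was kept in step is not specified. A hedged sketch of one common approach: estimate the offset between the two machines' clocks with an NTP-style request/reply exchange, then have both sides count down from a single agreed start time. The message flow and the names `estimateOffset` and `remainingMs` are hypothetical.

```javascript
// Hypothetical sketch: keeping a countdown in step on two machines.
// Offset estimation follows the NTP on-wire formula; all times are milliseconds.

// t0: request sent (local clock)   t1: request received (remote clock)
// t2: reply sent (remote clock)    t3: reply received (local clock)
// Returns how far the remote clock is ahead of the local one, plus the
// network round-trip time (excluding the remote side's processing time).
function estimateOffset(t0, t1, t2, t3) {
  return {
    offsetMs: ((t1 - t0) + (t2 - t3)) / 2,
    roundTripMs: (t3 - t0) - (t2 - t1),
  };
}

// Time left in a round that the remote side started at `remoteStartMs`
// (its own clock), converted to the local clock using the estimated offset.
function remainingMs(remoteStartMs, durationMs, offsetMs, localNowMs) {
  const localStart = remoteStartMs - offsetMs;
  return Math.max(0, localStart + durationMs - localNowMs);
}
```

The estimate is exact when network delay is the same in both directions; an asymmetric path biases it by half the difference between the two one-way delays, which is usually negligible for a round timer measured in seconds.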

Current work

I continue to improve the core system, leading its design and front-end development. The system is written primarily in JavaScript with jQuery, and we recently replaced our direct use of the WebRTC APIs with the PeerJS library, which is more stable and better maintained. My next step is to refactor the project into a starter kit, rather than a configurable content platform, built with AngularJS, PeerJS, and Famo.us. We also plan to apply the system to healthcare and to communication between research facilities.